Galera is a multimaster MySQL cluster that provides virtually synchronous replication by certifying so-called "write-sets", which ensures that all database transactions are committed on all cluster nodes. The software is developed and maintained by Codership.

This article is a compilation of material on the Galera cluster in general, combined with insights gained in Codership training sessions. We hope it sharpens the reader's understanding of clustering for MySQL and its variants such as MariaDB.

The Adfinis team is more than happy to support you if your organization needs to run highly available MySQL workloads and you would like some help planning, building and running such a service.

In comparison to traditional leader/follower replication setups, a Galera cluster promises increased availability and the ability to read from and write to any cluster node. However, replacing a single-server database backend with a Galera cluster is not necessarily transparent to the application and therefore cannot be recommended in every case. Before replacing a traditional database setup with a clustered one, it is crucial to be aware of the changed inter-node isolation levels and limitations in order to estimate the potential impact on workloads. Generally speaking, the isolation and order of transactions will be affected by the replication protocol. Compared to a single-node server, the application might not always get back the expected answer and may break as a result. This can happen, for instance, if repeated queries made on separate cluster nodes return different results, or if a read query issued directly after a write query (read-after-write) is executed on another node.
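One mitigation for the read-after-write case is Galera's `wsrep_sync_wait` session variable: with causality checks enabled, a node waits until it has applied all write-sets committed in the cluster up to the point of the check before it answers the read. The following is a minimal sketch, assuming the stock `mysql` client with credentials in `~/.my.cnf`; the function name `causal_read` and the `MYSQL` override variable are hypothetical helpers introduced for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a read query on HOST with Galera causality checks
# enabled for this session only (wsrep_sync_wait = 1 covers SELECT, SHOW and
# BEGIN/START TRANSACTION). MYSQL can point at another client command; it
# defaults to the stock mysql client, which reads credentials from ~/.my.cnf.
causal_read() {
  local host="$1" query="$2"
  "${MYSQL:-mysql}" -h "$host" -N -B \
    -e "SET SESSION wsrep_sync_wait = 1; ${query}"
}
```

For example, `causal_read galera02 "SELECT COUNT(*) FROM shop.orders"` (hypothetical schema) would see a row just written on another node. The trade-off is additional read latency, so the check is best enabled only for the reads that actually need it.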

Oftentimes, proxies or query routers are installed in front of the cluster to direct application traffic to the correct nodes. This mimics the behavior of a single database server. However, these components should be built in a highly available manner as well, which increases the cost and complexity of the entire solution.

Automated failover mechanisms are usually applied sparingly in traditional replication architectures, because data consistency is at risk in split-brain scenarios. Fortunately, such mechanisms are superfluous with a Galera cluster thanks to its multimaster capability. Also, adding cluster nodes quickly increases fault tolerance at the node level. The overall availability at the cluster level, however, depends on the number of available failure domains, which for on-premise deployments is mostly limited by the number of datacenters.
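Galera keeps a primary component only while the surviving nodes form a strict majority (more than half) of the previous primary component. The sketch below checks whether a given distribution of nodes across failure domains survives the abrupt loss of any single domain. It assumes equal node weights (the default `pc.weight` of 1) and an ungraceful failure; the function name `survives_domain_loss` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: each argument is the number of Galera nodes in one
# failure domain (e.g. one datacenter or availability zone). Prints one line
# per domain telling whether the remaining nodes keep quorum when that domain
# fails abruptly, and returns non-zero if any single-domain loss is fatal.
# Assumes equal node weights (pc.weight=1) for every node.
survives_domain_loss() {
  local total=0 n i remaining rc=0
  for n in "$@"; do
    total=$((total + n))
  done
  i=0
  for n in "$@"; do
    i=$((i + 1))
    remaining=$((total - n))
    # Quorum requires a strict majority: remaining > total / 2
    if (( 2 * remaining > total )); then
      echo "domain $i down: $remaining/$total nodes left, quorum kept"
    else
      echo "domain $i down: $remaining/$total nodes left, quorum lost"
      rc=1
    fi
  done
  return "$rc"
}
```

With `survives_domain_loss 2 1`, losing the domain that hosts two nodes leaves 1 of 3 nodes and therefore no primary component, while `survives_domain_loss 1 1 1` survives the loss of any one domain. This is why a third failure domain matters, even if it only hosts the Galera Arbitrator (`garbd`, from the `galera-arbitrator-4` package), which takes part in quorum votes without storing data.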

As discussed above, failures at the cluster level are harder to mitigate with limited resources, especially in environments restricted to few locations. Luckily, the cloud makes it easy and cost-efficient to distribute workloads across availability zones. When nodes are distributed across fault domains, cloud deployments can improve the cluster's availability from the start, without much additional thought about resiliency at the cluster level. Galera Manager is a tool that simplifies deploying and managing clusters and nodes on AWS. Additionally, it can be used to monitor existing clusters.

The automatic deployment of Galera nodes with Galera Manager is primarily tailored to AWS and is still in beta at the time of writing.

We hope to see support for other deployment targets as well. A cloud-agnostic deployment tool for Galera would greatly increase platform independence, as one could easily provision a database cluster across different cloud providers and thereby reduce the vendor lock-in of the "database as a service" offerings from major cloud providers.

Because information on deploying Galera clusters on AWS is widely available, this article elaborates on cluster deployments using Linux containers (LXC) and the integration of so-called "unmanaged" nodes, which is only briefly discussed in the upstream documentation.

If you want to test how Galera Manager works locally before your first deployments in the cloud, follow the steps below. Galera Manager can be installed by invoking the installation script:

```shell
root@gm01:~# curl -sO https://galeracluster.com/galera-manager/gm-installer
root@gm01:~# chmod +x gm-installer
root@gm01:~# ./gm-installer install
INFO[0000] OS Detected: Debian / Ubuntu / Linux / bionic / 18.04
...
The installation log is located at /tmp/gm-installer.log
```

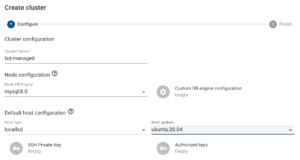

After Galera Manager is installed, the base configuration for a new cluster can be defined in the web UI:

Fig. 1: Create a managed Galera cluster


To deploy the actual nodes as containers, it is recommended to have at least a minimally configured LXD service ready:

```shell
root@gm01:~# systemctl start lxd
# Initialize LXD with sane default values for testing purposes
# https://linuxcontainers.org/lxd/docs/master/#how-do-i-configure-lxd-storage?
root@gm01:~# lxd init --auto
# Give the Galera Manager daemon (gmd) access to the LXD socket
# https://linuxcontainers.org/lxd/docs/master/security
root@gm01:~# usermod -a -G lxd gmd
root@gm01:~# systemctl restart gmd
```
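A new group membership only applies to processes started afterwards, which is why `gmd` is restarted. Before restarting, a quick check that `gmd` really is in the `lxd` group can save some debugging. A minimal sketch based on `id -nG`; the function name `user_in_group` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if USER is a member of GROUP (primary or
# supplementary), as reported by `id -nG`.
user_in_group() {
  local user="$1" group="$2" g
  for g in $(id -nG "$user"); do
    [ "$g" = "$group" ] && return 0
  done
  return 1
}
```

Usage on the Galera Manager host: `user_in_group gmd lxd && systemctl restart gmd`.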

The bootstrap script for new Ubuntu nodes assumes that the AppArmor utilities are available in the container. This assumption does not hold for the default LXC base images launched by Galera Manager. Furthermore, these images don't include rsync, which is needed for the state snapshot transfer (SST) between the nodes. Therefore, we should tweak the image slightly before launching the cluster:

```shell
# Ubuntu 20.04 (focal) image download from default remote "images:"
root@gm01:~# lxc launch images:ubuntu/focal test
Creating test
Starting test
# Remove the test container; the downloaded image stays in the local image store
root@gm01:~# lxc delete -f test
# Show fingerprint of the image
root@gm01:~# lxc image ls -cf --format csv
c3e80efdcd15
# Unpack the rootfs of the image
root@gm01:~# cd /var/lib/lxd/images/
root@gm01:/var/lib/lxd/images# unsquashfs c3e80efdcd15823ef2f372955915f94f65a24a0444e5c32dada6a72ba6e31cd8.rootfs
# Prepare chroot
root@gm01:/var/lib/lxd/images# mount -o bind /dev squashfs-root/dev/
root@gm01:/var/lib/lxd/images# mkdir -p squashfs-root/run/systemd/resolve
root@gm01:/var/lib/lxd/images# mount -o bind /run/systemd/resolve/ squashfs-root/run/systemd/resolve
# Enter the image chroot and install the missing utilities
root@gm01:/var/lib/lxd/images# chroot squashfs-root
root@gm01:/# apt update && apt install -y rsync apparmor
root@gm01:/# exit
# Cleanup mounts
root@gm01:/var/lib/lxd/images# for m in $(mount | grep squashfs-root | awk '{print $3}'); do umount "$m"; done
# Create new rootfs image
root@gm01:/var/lib/lxd/images# mksquashfs squashfs-root squashfs-root.rootfs
# Backup original rootfs
root@gm01:/var/lib/lxd/images# mv c3e80efdcd15823ef2f372955915f94f65a24a0444e5c32dada6a72ba6e31cd8.rootfs{,.bck}
# Replace current rootfs of the image with the patched rootfs
root@gm01:/var/lib/lxd/images# mv squashfs-root.rootfs c3e80efdcd15823ef2f372955915f94f65a24a0444e5c32dada6a72ba6e31cd8.rootfs
```

Admittedly, this process seems esoteric, but unfortunately Galera Manager offers only a limited selection of base images, without the option to download from a specific LXC remote. A setting to specify an exact LXC image would greatly simplify this process.
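The long fingerprint in the commands above differs for every image build. Rather than copying it by hand, the rootfs file can be located from the short fingerprint printed by `lxc image ls`. A minimal sketch, assuming the non-snap LXD layout with images under `/var/lib/lxd/images/` as used above (snap installations keep images in a different directory); the function name `rootfs_for_fingerprint` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the path of the image rootfs file whose name
# starts with SHORT_FP (the short fingerprint shown by `lxc image ls`).
# IMAGES_DIR defaults to the non-snap LXD location used in this article.
rootfs_for_fingerprint() {
  local short="$1" dir="${2:-/var/lib/lxd/images}" f
  for f in "$dir/$short"*.rootfs; do
    # Without a match the glob stays literal, so check the file exists
    [ -e "$f" ] || continue
    printf '%s\n' "$f"
    return 0
  done
  echo "no rootfs found for fingerprint $short in $dir" >&2
  return 1
}
```

For example, `unsquashfs "$(rootfs_for_fingerprint c3e80efdcd15)"` unpacks the same file as the hardcoded command above. The `.rootfs.bck` backup is not matched, because the glob requires the name to end in `.rootfs`.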

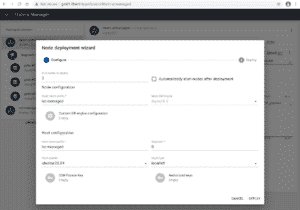

Afterwards, the (CentOS or Ubuntu based) LXC container nodes can be added to the cluster conveniently:

Fig. 2: Automatically deploy LXC container nodes with the Galera Manager


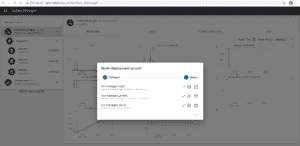

Follow the cluster setup process in the browser UI or via the Galera Manager log files:

```shell
root@gm01:~# tail -f /var/log/gmd/host-*
```

Fig. 3: Galera LXC node deployment status


Existing clusters can be integrated into Galera Manager as "unmanaged" nodes. This mode assumes that the node was configured and deployed before it was registered in Galera Manager. Also, in contrast to the LXC containers from the last section, these nodes are not configured automatically (hence "unmanaged"). But don't fret. The database administrator training course offered by Codership is designed to equip administrators with everything needed to deploy, configure, join and maintain cluster nodes manually. Feel free to contact us for more information about the available trainings.

For the sake of simplicity, we reuse the MySQL configuration suggested for the previously provisioned LXC containers as a base configuration. Also, we again install the most "pristine" version of the Codership Galera 4 cluster, using the original MySQL 8 as the base version.

For the manual setup on unmanaged Ubuntu 18.04 virtual machines, follow the excellent documentation upstream:

```shell
root@galera-n:~# cat > /etc/apt/sources.list.d/codership.list <<EOF
# Codership Repository (Galera Cluster for MySQL)
# https://galeracluster.com/library/documentation/install-mysql.html
deb https://releases.galeracluster.com/mysql-wsrep-8.0/ubuntu bionic main
deb https://releases.galeracluster.com/galera-4/ubuntu bionic main
EOF
# Receive the repository signing key from the keyserver
apt-key adv --keyserver keyserver.ubuntu.com --recv BC19DDBA
# Non-interactive install of Codership Galera components,
# latest Galera 4 on top of the MySQL 8 base version
export DEBIAN_FRONTEND=noninteractive
apt update && apt-get install galera-4 galera-arbitrator-4 mysql-wsrep-8.0 -y
```
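The repository lines above hardcode the Ubuntu 18.04 codename `bionic`. The sketch below prints them for any codename, so they can be generated from the `VERSION_CODENAME` a host reports in `/etc/os-release`. It assumes that Codership publishes packages for that codename under the same URL scheme, which should be checked against the repository before use; the function name `codership_sources` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Codership APT source lines for an Ubuntu
# codename. Assumes the release suite exists in both repositories.
codership_sources() {
  local codename="$1"
  cat <<EOF
# Codership Repository (Galera Cluster for MySQL)
deb https://releases.galeracluster.com/mysql-wsrep-8.0/ubuntu ${codename} main
deb https://releases.galeracluster.com/galera-4/ubuntu ${codename} main
EOF
}
```

Usage: `. /etc/os-release && codership_sources "$VERSION_CODENAME" > /etc/apt/sources.list.d/codership.list`.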

Disable AppArmor for the MySQL daemon for testing purposes on the unmanaged virtual machines (which run their own kernel), as advised in the upstream recommendations:

```shell
root@galera-n:~# ln -s /etc/apparmor.d/usr.sbin.mysqld /etc/apparmor.d/disable/
root@galera-n:~# apparmor_parser -R /etc/apparmor.d/disable/usr.sbin.mysqld
root@galera-n:~# systemctl stop mysql.service
```

Next we are ready to apply some initial Galera configuration to our unmanaged nodes:

```shell
# The EOF delimiter is unquoted, so the shell expands $HOSTNAME while writing the file
root@galera-n:~# cat > /etc/mysql/mysql.conf.d/mysqld.cnf <<EOF
[mysqld]
# Listen on all interfaces
bind-address = "0.0.0.0"
# WSREP Options
wsrep_on = ON
wsrep_provider = "/usr/lib/libgalera_smm.so"
wsrep_cluster_name = "unmanaged-01"
wsrep_node_name = "$HOSTNAME"
wsrep_cluster_address = "gcomm://galera01,galera02,galera03"
wsrep_node_address = "$HOSTNAME"
wsrep_sst_method = "rsync"
EOF
```

Note that the above commands on the Galera nodes galera-n are usually run with an infrastructure automation tool of choice, such as Ansible.
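Since the configuration differs between nodes only in the node name and address, it lends itself to templating, whether in Ansible or in plain shell. A minimal sketch of the latter follows; the function name `render_galera_cnf` and its argument order are hypothetical. The options mirror the ones used in this article and are placed in a `[mysqld]` option group, where the MySQL server expects them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the Galera part of mysqld.cnf for one node.
# Usage: render_galera_cnf CLUSTER_NAME NODE_NAME NODE_ADDRESS MEMBER...
render_galera_cnf() {
  local cluster="$1" node="$2" address="$3" members
  shift 3
  # Join the remaining arguments with commas for the gcomm:// address list
  members=$(IFS=,; echo "$*")
  cat <<EOF
[mysqld]
# Listen on all interfaces
bind-address = "0.0.0.0"
# WSREP Options
wsrep_on = ON
wsrep_provider = "/usr/lib/libgalera_smm.so"
wsrep_cluster_name = "${cluster}"
wsrep_node_name = "${node}"
wsrep_cluster_address = "gcomm://${members}"
wsrep_node_address = "${address}"
wsrep_sst_method = "rsync"
EOF
}
```

Usage on each node: `render_galera_cnf unmanaged-01 "$(hostname)" "$(hostname)" galera01 galera02 galera03 > /etc/mysql/mysql.conf.d/mysqld.cnf`.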

To bootstrap the primary component of the unmanaged cluster, run the following on exactly one of the nodes (for a fresh cluster, any node will do):

```shell
root@galera-01:~# mysqld_bootstrap
```
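This is straightforward for a fresh cluster. When the whole cluster has been shut down and has to be started again, only the node with the most recent state should be bootstrapped. Galera records this in the `safe_to_bootstrap` field of `grastate.dat` in the data directory (`/var/lib/mysql` by default), setting it to `1` on the last node that left the cluster gracefully. A minimal guard reading that field is sketched below; the function name `safe_to_bootstrap` is hypothetical. If every node crashed, all of them report `0` and the most advanced node has to be determined manually.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if grastate.dat marks this node as safe
# to bootstrap (safe_to_bootstrap: 1). Fails if the file cannot be read.
safe_to_bootstrap() {
  local state_file="${1:-/var/lib/mysql/grastate.dat}" flag
  flag=$(awk -F': *' '$1 == "safe_to_bootstrap" { print $2 }' "$state_file" 2>/dev/null) || return 1
  [ "$flag" = "1" ]
}
```

Usage: `safe_to_bootstrap && mysqld_bootstrap`.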

The remaining nodes can be joined by starting the MySQL daemon:

```shell
root@galera-n:~# systemctl start mysql.service
```
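A joining node first receives a state snapshot transfer (here via rsync) before it becomes a full cluster member. When scripting the join, it can help to wait until `wsrep_cluster_size` reports the expected number of nodes. A minimal sketch, assuming the `mysql` client can log in locally without a password prompt (e.g. via socket authentication as root); the function name `wait_for_cluster_size` and the `MYSQL` override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll wsrep_cluster_size until it equals EXPECTED or
# TRIES attempts (one second apart) are used up. MYSQL defaults to the stock
# mysql client and can be overridden, e.g. for testing.
wait_for_cluster_size() {
  local expected="$1" tries="${2:-60}" size i
  for ((i = 1; i <= tries; i++)); do
    # -N -B prints "wsrep_cluster_size<TAB><value>" without a header
    size=$("${MYSQL:-mysql}" -N -B \
      -e "SHOW GLOBAL STATUS LIKE 'wsrep_cluster_size'" | awk '{ print $2 }')
    [ "$size" = "$expected" ] && return 0
    (( i < tries )) && sleep 1
  done
  return 1
}
```

Usage: `systemctl start mysql.service && wait_for_cluster_size 3`.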

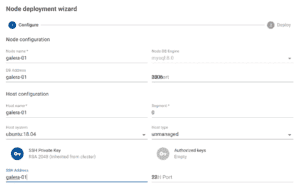

To integrate the Ubuntu based cluster VMs into Galera Manager, simply define a new cluster and add them as "unmanaged" nodes through the UI:

Fig. 4: Add unmanaged nodes to the Galera Manager


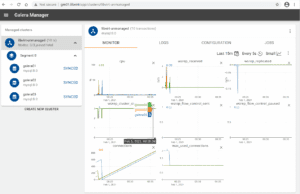

Finally, the manager presents a default dashboard and allows us to add more graphs for selected variables in order to monitor the health of the cluster.

Fig. 5: Monitor unmanaged nodes with Galera Manager
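Independent of the dashboard, the same status variables can be queried from the shell, for instance for a load balancer health check or an external monitoring system. A node is generally only fit to serve traffic when it belongs to the primary component (`wsrep_cluster_status` is `Primary`), is fully synchronized (`wsrep_local_state_comment` is `Synced`) and accepts queries (`wsrep_ready` is `ON`). A minimal sketch, assuming the `mysql` client can log in locally without a password prompt; the function name `galera_node_healthy` and the `MYSQL` override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the local node is in the primary component,
# synced and ready. MYSQL defaults to the stock mysql client.
galera_node_healthy() {
  local status
  status=$("${MYSQL:-mysql}" -N -B -e "SHOW GLOBAL STATUS WHERE Variable_name IN
    ('wsrep_cluster_status', 'wsrep_local_state_comment', 'wsrep_ready')") || return 1
  # Each output line is "<variable><TAB><value>"; all three must match
  awk -F'\t' '
    $1 == "wsrep_cluster_status"      && $2 == "Primary" { ok++ }
    $1 == "wsrep_local_state_comment" && $2 == "Synced"  { ok++ }
    $1 == "wsrep_ready"               && $2 == "ON"      { ok++ }
    END { exit (ok == 3 ? 0 : 1) }
  ' <<< "$status"
}
```

A node acting as SST donor reports `Donor/Desynced` and is treated as unhealthy by this check, which matches the blocking behavior of the rsync SST method used here.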


We briefly introduced monitoring with Galera Manager above, but there is a lot more to it. As we have seen, the manager provides a good overview of important cluster variables. In practice, however, you will probably need to go into far more detail on additional topics such as backup, cluster recovery, monitoring, logging, performance tuning, write-set cache size calculation, additional wsrep options, different Galera cluster versions and more. We're more than happy to help you with this and bring in the Adfinis expertise. We have been managing critical infrastructure for more than 20 years and have a team of highly skilled engineers who are happy to assist you in planning, building and running a Galera cluster.

We hope this article provided an overview of some deployment strategies for test-driving Galera clusters with or without Galera Manager (unmanaged nodes). If you are curious about the latest improvements in the most recent Galera release, feel free to contact us or head over to the official announcement/changelog for Galera 4 on MySQL 8. Among other things, it includes exciting changes regarding:

Lastly, we would like to point again to the high-quality video material and documentation freely available on the Codership website.

Adfinis offers managed services for all kinds of technologies that run on Linux, whether on-premise, in the cloud or in a mixed environment. We are interested in hearing about your requirements and eager to find out how we can help you plan, build and run services, so your team can concentrate on the core business of your organization. Contact us now or find out more about our managed services. If you'd like to take a deep dive into the Galera topic on your own, we also recommend the Codership online training courses, which are offered as classes via Zoom and led by professional instructors who guide you through the installation and configuration of a Galera cluster.

Link to the training course: Galera transaction isolation guarantees: Migrate to Galera cluster: Galera Manager documentation: Manual Galera cluster installation: Disable AppArmor for Galera cluster installations: Galera 4 for MySQL 8 Announcement: Video material and full documentation:
