News

The latest from the world of Adfinis SyGroup

The open source service provider Adfinis and Snyk, a leading provider of security solutions for developers, are entering into a partnership. As a sales and integration partner of Snyk, Adfinis brings the application security platform, which is popular with developers and security teams alike, to customers in Switzerland, the Netherlands and Australia.

Snyk is the platform developers choose to build cloud native applications securely. Its IDE and SCM integrations make it possible to scan for vulnerabilities while the code is still being written. Vulnerabilities can thus be fixed automatically at an early stage, and the insights gained can be put into practice right away. This prevents new vulnerabilities from entering the codebase through new dependencies and code changes. Reports and alerts ensure that all development teams keep an overview of the application’s security posture. Governance is handled through company-wide defined and customized security and license policies as well as contextual prioritization.

In this way, Snyk enables developers to integrate security comprehensively. Relevant application and security information is brought together in a SaaS platform, and the products meet the requirements of both legacy and modern systems, including open source, containers and infrastructure as code. Snyk thus provides security across the cloud native application stack and makes it possible to secure all components of a modern cloud native application.

Snyk is already used by millions of developers and more than 1,200 customers, including Asurion, Google, Intuit, MongoDB, New Relic, Revolut and Salesforce. Snyk’s customers and users have run more than 300 million tests in the last 12 months and fixed more than 30 million vulnerabilities in the last 90 days. In Switzerland, Snyk counts Helvetia among its customers. Helvetia’s Swiss developers particularly value the quality assurance Snyk provides during development. Snyk recently closed a funding round of 530 million dollars at a valuation of 8.5 billion dollars. The investment will further drive Snyk’s product innovation and development. Some of these innovations will be presented this week at SnykCon 2021, Snyk’s free annual developer conference. SnykCon will feature new extensions for the application security platform, new workflow integrations, and new and improved features.

“As an open source service provider, we were looking for a solution that has proven itself particularly in the field of open technologies and meets our customers’ security and compliance requirements. Snyk fits this gap perfectly and adds an important piece of the puzzle to our portfolio in the area of DevSecOps.” Nicolas Christener, CEO & CTO Adfinis AG

About Snyk

Snyk, the market leader in application security, helps developers worldwide build secure applications and equips security teams to meet the demands of digital transformation. Snyk puts developers first, enabling organizations to secure all critical application components, from code to cloud. This makes developers more efficient and drives revenue growth and customer satisfaction while reducing costs and improving security. Snyk’s application security platform integrates seamlessly into developers’ workflows and was purpose-built for collaboration between security and development teams. Snyk is currently used by 1,200 customers worldwide, including industry leaders such as Asurion, Google, Intuit, MongoDB, New Relic, Revolut and Salesforce. Snyk is listed in the Forbes Cloud 100 2021 and the CNBC Disruptor 50 2021 and was named a Visionary in the 2021 Gartner Magic Quadrant for AST.

The general availability of the Key Management Secrets Engine in Vault 1.7+ent or higher facilitates the creation, management and deployment of symmetric and asymmetric cryptographic keys from HashiCorp Vault to remote keystores. Currently, this enterprise engine supports Azure Key Vault as a target Key Management System (KMS) and already ships beta support for AWS KMS. The engine is part of the Vault Enterprise Advanced Data Protection (ADP) offering and is useful for enterprises that are required to control the lifecycle of cryptographic keys (creation, distribution, rotation, revocation, etc.).

Use Vault >= 1.7.2+ent. It includes a fix for a panic error that occurred on audit logging while reading keys from the engine.

The engine increases the portability of cryptographic keys, because the secret key can be distributed to remote key stores while protecting the original key source in Vault. Prevalent security modules or keystores keep secret key material locked tight. For instance, the Azure Key Vault does not support export operations on the private key, i.e., allows exporting the public part of the key material only, not the actual secret. In this sense, the Vault Key Management Secrets Engine represents a holistic solution to generate, maintain and transport secret cryptographic material.

This post captures a few thoughts on how the Key Management Secrets Engine can meet your business requirements for secure cryptographic keys. For technical details on how to use the Key Management Secrets Engine, refer to HashiCorp Learn, the official documentation for the secrets engine and the corresponding API documentation page.

Contact us to explore how Vault can be implemented in your workflows and environment to protect your applications’ keys and cover your secret management needs.

Why Use Your Own Key

Take the terminology with a grain of salt. The content on which this blog post is based evolved during the last year and was adapted frequently to changes in the upstream documentation.

The Key Management Secrets Engine opens the door for a plethora of interesting application scenarios. Among others, it allows the distribution of cryptographic keys to Azure Key Vault. The keys in Azure Key Vault can then be consumed by a myriad of downstream applications:

- Encryption at rest for Azure VM Disks: Azure Disk Encryption (ADE) or Server-Side Encryption (SSE) for Azure Disks

- Encryption at Rest for Azure Storage (Blob, Files)

- Bring Your Own Key (BYOK) for Azure Information Protection (AIP)

Clearly, the Vault Key Management Secrets Engine is beneficial for applications that use the keys stored in the remote key management systems. For example, keys in Azure Key Vault can be used to protect data in Snowflake (data warehouse). With the “tri-secret secure” Snowflake enterprise feature, Snowflake encryption keys can be combined with a BYOK key to create a composite master key for data protection. Thus, data can no longer be decrypted if either one of the keys is revoked: the key in Azure Key Vault originating at HashiCorp Vault or the key maintained by Snowflake.

The Key Management Secrets Engine also enables some use cases that might not be obvious at first sight and that lead back to Vault as a downstream consumer. For instance, the engine can be used to manage and stay in control of cloud keys that are used to unseal other Vault clusters (i.e., a backup of the actual cloud auto-unsealing keys and not just the recovery keys).

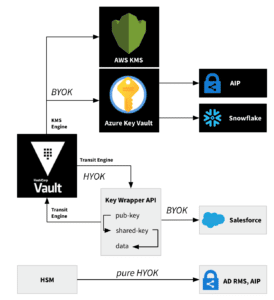

Procedures for the distribution of customer-managed keys to cloud environments (cloud key management or key brokering) can be categorized in Hold Your Own Key (HYOK) or Bring Your Own Key (BYOK) solutions.

Fig. 1: HYOK, BYOK or mixed use cases with the HashiCorp Vault Key Management Secrets Engine

The two terms are easily confused and are used with some flexibility. In a mixed HYOK/BYOK scenario, the remote service requests the key material from HashiCorp Vault. The request is initiated on the consumer side (pull mode). The request can be directed straight to Vault (e.g., authenticated with the Vault JWT backend) or intercepted by an intermediary API, such as the Distributey key wrapper. No Key Management Secrets Engine is needed in this situation, because the Vault Transit engine is sufficient to implement a scenario where the remote service fetches the key material from Vault. This mixed model with an intermediary API sources the keys from the Vault Transit engine. It has proved useful in situations where the remote service cannot retrieve key material from a remote key store supported by the Vault Key Management Secrets Engine.

Depending on the understanding and definition of HYOK, the remote service may not be allowed to read the plain-text user data (data opacity). In this purest form of the HYOK model, the user is in possession of the private key and the raw payload (user data) at all times and actually “owns and holds the key”. As a result, data protected by a local key cannot be automatically encrypted and processed by cloud applications without the user’s consent. This restrictive form of protection is suitable for very sensitive data that requires no processing in the cloud. The private key is not transferred to the remote service at all; rather, data is encrypted before transmission. However, there is no agreement on this mental model for HYOK, and the term is therefore not always used consistently across cloud providers. For example, secret key material is cached in the cache-only key service by Salesforce, which was labeled a HYOK solution until recently, whereas Azure insists that the private key remains on premises when using HYOK. Hence, the location of the cache and the cache TTL can make the exact identification of HYOK and BYOK solutions a fuzzy affair. Azure permits the operation of both key paradigms, HYOK and BYOK. It is recommended to use the BYOK paradigm for AIP as well, since the AIP classic client is deprecated and the HYOK approach mainly targeted this classic client.

In contrast to HYOK, the key material is pushed from Vault to the remote service when using BYOK. In other words, there is usually no inbound request from the service provider. In practice, the BYOK approach is easier to implement, because no engineering effort for additional infrastructure (key wrapper) is needed. Also, publishing the API of a key wrapper (interface or actual HashiCorp Vault) increases the potential attack surface.

From a legal perspective, the HYOK method is oftentimes favored because of the aforementioned mental image of a pure HYOK implementation. HYOK enjoys a more advantageous wording, and if implemented very restrictively, can be considered superior from a security point of view.

Obviously, the short list of use cases above and the schematic example scenarios in Fig. 1 can never be conclusive. Get in touch with us to explore how Vault can fulfill the key brokering requirements of your specific business case.

Key Wrapping in Transport and at Rest

Independent of the exact model for key distribution (Key Management Secrets Engine or Transit engine combined with on-prem wrapping API), the cryptographic keys are usually exchanged in the industry standard JSON Web Encryption format (JWE, see RFC 7516). JWE leverages a combination of symmetric (shared) and asymmetric key encryption to encrypt the key material during transport. The Vault Key Management Secrets Engine abstracts this wrapping process. In other words, wrapping procedures (JWE) are taken care of by HashiCorp Vault when the Key Management Secrets Engine is applied in a BYOK transfer, which clearly is an advantage compared to mixed or pure HYOK styles of transfer.
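To make the JWE format more tangible, the following minimal sketch splits a JWE compact serialization into the five base64url-encoded parts defined by RFC 7516 (protected header, encrypted key, initialization vector, ciphertext, authentication tag). It only inspects the structure and does not decrypt anything. The token is built from hypothetical placeholder bytes, and the helper names are illustrative assumptions rather than part of any Vault or JWE library.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode base64url without padding, as used in JWE compact form."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(raw: bytes) -> str:
    """Encode bytes as base64url and strip the padding."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def split_jwe_compact(token: str) -> dict:
    """Split a JWE compact serialization into its five decoded parts."""
    parts = token.split(".")
    if len(parts) != 5:
        raise ValueError("a JWE compact serialization has exactly five parts")
    header_b64, encrypted_key, iv, ciphertext, tag = parts
    return {
        "protected_header": json.loads(b64url_decode(header_b64)),
        "encrypted_key": b64url_decode(encrypted_key),  # CEK wrapped with the KEK
        "iv": b64url_decode(iv),
        "ciphertext": b64url_decode(ciphertext),        # payload encrypted with the CEK
        "tag": b64url_decode(tag),
    }


# Hypothetical token: the header names real JWA algorithms, while all
# other parts are placeholder bytes instead of real cryptographic output.
header = {"alg": "RSA-OAEP", "enc": "A256GCM"}
example_token = ".".join([
    b64url_encode(json.dumps(header).encode("utf-8")),
    b64url_encode(b"wrapped-cek"),
    b64url_encode(b"iv-12-bytes!"),
    b64url_encode(b"encrypted-dek"),
    b64url_encode(b"auth-tag"),
])

parsed = split_jwe_compact(example_token)
print(parsed["protected_header"])
```

The `alg` header names the asymmetric algorithm that protects the content encryption key, and `enc` names the symmetric algorithm that protects the payload, which mirrors the combination of shared and asymmetric encryption described above.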

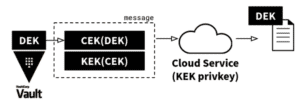

Double encryption, composite or wrapped keys are common techniques to protect the user data in the remote service with a local key from on-premises (the BYOK key). In this way, the trust radius spans multiple parties, since both the key of the data owner and the cloud provider are required to decrypt and process the payload. An example scenario for key transport includes three types of keys:

- Data Encryption Key (DEK): Local target key from HashiCorp Vault which will be used to encrypt the user data. This key can be generated with the Transit or Key Management Secrets Engine, depending on the “own key model” depicted in Fig. 1 and the interoperability capabilities of the remote service with common cloud key stores such as AWS KMS or Azure Key Vault.

- Content Encryption Key (AES CEK): Locally generated key for key wrapping

- Key Encryption Key (RSA KEK): Key pair of the key consumer

Fig. 2: Transmission of a wrapped key to a remote service

The Data Encryption Key (DEK) is the target key material from the Vault Transit engine and is first encrypted with an ephemeral AES wrapping key (CEK). In a second step, the CEK is encrypted with the public key of the receiver (KEK). The encrypted DEK and the encrypted CEK are both part of the message sent to the remote service. The remote service decrypts the CEK with its private key and uses it to unpack the DEK, which finally encrypts the user data.
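The two wrapping steps can be sketched with the Python standard library alone. Note the assumptions: the symmetric cipher below is a toy XOR keystream standing in for AES, and the key pair is textbook RSA built from two well-known Mersenne primes standing in for a real RSA KEK. Neither is secure, and neither reflects how Vault or any remote service implements wrapping. The sketch only illustrates the order of operations (DEK under CEK, CEK under KEK) and all function names are hypothetical.

```python
import hashlib
import secrets


def toy_symmetric(key: bytes, data: bytes) -> bytes:
    """Toy stand-in for AES: XOR data with a SHA-256 derived keystream.

    NOT secure. Applying it twice with the same key restores the input.
    """
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


# Toy stand-in for the receiver's RSA key pair (KEK). NOT secure:
# textbook RSA without padding and with a far too small modulus.
P, Q, E = 2**127 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (Python 3.8+)


def wrap(dek: bytes, kek_public: tuple) -> dict:
    """Sender side: wrap the DEK with an ephemeral CEK, then the CEK with the KEK."""
    n, e = kek_public
    cek = secrets.token_bytes(16)                        # ephemeral wrapping key
    wrapped_dek = toy_symmetric(cek, dek)                # step 1: DEK under CEK
    wrapped_cek = pow(int.from_bytes(cek, "big"), e, n)  # step 2: CEK under KEK
    return {"wrapped_dek": wrapped_dek, "wrapped_cek": wrapped_cek}


def unwrap(message: dict, kek_private: tuple) -> bytes:
    """Receiver side: recover the CEK with the private key, then the DEK."""
    n, d = kek_private
    cek = pow(message["wrapped_cek"], d, n).to_bytes(16, "big")
    return toy_symmetric(cek, message["wrapped_dek"])


dek = secrets.token_bytes(32)      # target key material
message = wrap(dek, (N, E))        # sent to the remote service
recovered = unwrap(message, (N, D))
print(recovered == dek)
```

In a real transfer, the two toy primitives would be replaced by vetted implementations, for example the algorithms named in the JWE header.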

An additional key hierarchy in the cloud service can benefit from the same double encryption technique at rest, similar to the one used during transport, to further organize and simplify key management or to improve performance of encryption/decryption operations for large amounts of data.

Different from the general technique of wrapping keys or data within multiple layers of encryption, the Double Key Encryption service is an actual piece of software developed by Microsoft to ensure that user data cannot be encrypted without a local key. Nevertheless, the RSA keys for this local service could also be sourced and managed with the HashiCorp Transit engine.

Similarly to the Distributey key wrapper in a mixed HYOK/BYOK model (see Fig. 1), the request to retrieve key material would also be initiated by the service provider. However, unlike the Distributey key wrapper, the Double Key Encryption service only returns the public key for encryption. This message does not require JWE or wrapping for protection. The purpose of the Double Key Encryption service is different from the Distributey (or any other) key wrapper API, because it is used to decrypt data locally and does not act as a proxy for transferring secret key material.

How to Distribute Keys from HashiCorp Vault to Azure Key Vault

The general process for distributing encryption keys from HashiCorp Vault to Azure Key Vault can be summarized as follows:

1. Enable the Key Management Secrets Engine in Vault

2. Create a named cryptographic key of a specific type (e.g., 2048 bit RSA key)

3. Configure the KMS provider in Vault with the credentials of the Azure service principal

4. Distribute the key from step (2) to Azure Key Vault
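The four steps above can be sketched as a plan of HTTP requests against the Vault API. This is a minimal sketch under stated assumptions: the paths and parameter names (`keymgmt`, `rsa-2048`, `azurekeyvault`, `key_collection`, `purpose`, `protection`) follow the Key Management Secrets Engine API documentation as we understand it and should be verified against the version of Vault Enterprise in use. The mount path, key name, KMS name and credentials are hypothetical placeholders. The code only builds and prints the requests; it does not contact Vault.

```python
import json
from dataclasses import dataclass


@dataclass
class VaultRequest:
    method: str
    path: str
    payload: dict


def plan_key_distribution(mount, key_name, kms_name, key_vault_name, credentials):
    """Return the four requests for distributing a key to Azure Key Vault."""
    return [
        # 1. Enable the Key Management Secrets Engine
        VaultRequest("POST", f"/v1/sys/mounts/{mount}", {"type": "keymgmt"}),
        # 2. Create a named 2048 bit RSA key
        VaultRequest("POST", f"/v1/{mount}/key/{key_name}", {"type": "rsa-2048"}),
        # 3. Configure the KMS provider with the Azure service principal
        VaultRequest("POST", f"/v1/{mount}/kms/{kms_name}", {
            "provider": "azurekeyvault",
            "key_collection": key_vault_name,
            "credentials": credentials,
        }),
        # 4. Distribute the key from step 2 to Azure Key Vault
        VaultRequest("POST", f"/v1/{mount}/kms/{kms_name}/key/{key_name}", {
            "purpose": ["encrypt", "decrypt"],
            "protection": "hsm",
        }),
    ]


# Hypothetical names and placeholder credentials, for illustration only.
requests_plan = plan_key_distribution(
    mount="keymgmt",
    key_name="aip-tenant-key",
    kms_name="azure-kv",
    key_vault_name="example-key-vault",
    credentials={
        "client_id": "<client-id>",
        "client_secret": "<client-secret>",
        "tenant_id": "<tenant-id>",
    },
)

for request in requests_plan:
    print(request.method, request.path, json.dumps(request.payload))
```

Keeping the plan as plain data makes it easy to review the sequence before sending it with an HTTP client and a Vault token.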

Vault Key Management for Azure Information Protection (AIP)

“Azure Information Protection (AIP) is a cloud-based solution that enables organizations to discover, classify, and protect documents and emails by applying labels to content.”

What is Azure Information Protection?

When the Vault Key Management Secrets Engine provisions the tenant root key for Azure Information Protection (AIP), the KEK used to protect the DEK from Vault is derived from an HSM protected Azure Key Vault (premium SKU). The tenant root key for AIP can be rekeyed later on in response to a data breach or changing organizational structures. Compared to a key solely managed by the cloud provider, the key lifecycle is primarily determined by the actions performed in HashiCorp Vault. With a BYOK created in HashiCorp Vault, organizations have more control over key operations compared to a tenant key which is only managed by Microsoft.

The key specifications for the intended target key store and application usage should be planned carefully. For instance, to construct key material that can later be used for AIP, a 2048 bit RSA key is recommended. Fortunately, this type of key is supported by the HashiCorp Key Management Secrets Engine as well. As mentioned previously, BYOK procedures on Azure Key Vault require the premium tier Key Vault backed by an HSM, because the KEK cannot be derived from keys stored in software-protected Key Vaults (standard tier). Moreover, the Azure service principal needs the correct Azure access policy on the Azure Key Vault. Finally, the key purpose is not to be confused with the Azure Key Vault access policy. The key purpose defines the permitted Azure Key Vault operations and can be specified at distribution time.

To begin exploring HashiCorp Vault with the Transit and Key Management Secrets Engine on your own, start your free trial for Vault Enterprise today and let us know how Adfinis can support your business in staying in charge of secrets and key material.

You have a vision: you want the development of your infrastructure to be as scalable and agile as the development of your applications. Infrastructure as code will help you achieve that goal. So you’ve found the right tool – Terraform – but is your organization ready for the transformation? We’ll show you the four cultural and organizational elements you need to successfully adopt infrastructure as code.

It goes without saying that to build the foundation for a successful infrastructure as code practice you need the right tool. But do you know how to use that tool properly? And have you thought about how that tool will sit in your IT ecosystem? Which clouds do you want to support with that tool? The answers to these questions will bring you up to speed. However, they won’t make a long-lasting difference until you look at the impact of infrastructure as code on your team and culture.

A shared vision – supported by leadership

Just implementing a potentially successful tool won’t help if you don’t have a vision. You need to know what you’re actually looking to achieve in order to know what tool suits your organization best. Of course, sometimes you just want to jump on the bandwagon. You’ve thought about DevOps and you’ve heard that infrastructure as code is a great first step to embracing this philosophy. But what do you want to achieve?

Or perhaps you’ve got a clear vision of what you want, but have you shared that vision with the rest of the organization? You need a vision that is supported by leadership, so you can have a top-down approach, inspire teams, including the necessary operation engineers, to dive into all things ‘code’ and have all the resources in place to make the transition. Once you’ve got everyone working towards the same goal, it’s time to look at how you can create the right culture.

A testing culture

The right culture is the translation of the infrastructure as code philosophy for your organization. It is built up from different elements, such as having a testing culture. Testing is a fundamental step for application developers: in order to know what to build and to check whether the feature actually does what it’s supposed to do, they’ll write tests and execute them before the application goes live.

Infrastructure as code is no different from application development: it should do something and once you’ve coded a certain automation, it should do what it was designed for. Makes sense, right? Well, that means you need to think about testing before you code your infrastructure, so you can checkmark your way through your day. It’s oddly satisfying of course, but it’s also a matter of hygiene.

A versioning culture

Since the development of your infrastructure occurs a lot faster today than before, when you were manually building your machines, it’s vital that you know when you did what. We recommend using Git to keep track of your versions. Git doesn’t only help you keep track of who did what, it also helps you revert to specific versions, just in case something major goes wrong.

A culture of collaboration

Git version management also supports collaboration: code is shared and can be worked on simultaneously. And since all parts of the infrastructure are built and managed with the same code, work can actually be shared or transferred, which is also great if your engineer decides to leave the company or becomes ill. With Git, new staff can easily see how the structure is built, what changes were made, and make any corrections.

Of course, change is always difficult. If you’ve been working a certain way for a long time, it’s often hard to see the value in anything else. However, you also need a more agile way of managing your infrastructure and easily scaling up or sizing down any cloud in order to support your business. See it like this: when you’re building the playground, make sure it’s safe and big enough for all the kids to play in. That’s a big deal, because your role saves lives. Bearing this piece of wisdom in mind might make stepping into the unknown a little easier.

And don’t forget – we can help you with just this challenge: how to prepare your organization for the infrastructure as code revolution with Terraform. We offer a Terraform training, tailor-made for your organization. In other words: it doesn’t matter how far you are on your infrastructure as code journey, we’re here to help.

The chemistry is right: after becoming a GitLab “Open” partner only in January of this year, Adfinis was promoted to “Select” partner as early as May. Adfinis has held its own in the market and has shown over the past months that an even closer collaboration promises a flourishing future for all parties.

GitLab is the modern solution that gives development teams all the tools for implementing DevSecOps workflows from a single source. Whether on-premises, via SaaS or hybrid, GitLab is the flexible solution that gets teams to their goal faster. The integrated security and quality scans do not deliver the necessary analyses only in downstream processes; they are an integral part of each work step. From planning and code management through to the CI/CD pipelines for testing and deploying builds, GitLab comes with everything a developer’s heart desires. With GitLab, organizations rely on a secure, modern and intuitive solution that accelerates time to market and strengthens quality and security management.

How customers benefit from the new Select partnership

With the Select partnership, Adfinis has direct access to GitLab engineers, which makes it possible to address customers’ concerns very specifically and drastically shortens waiting times. In addition, GitLab subscriptions can be purchased through Adfinis. Adfinis and GitLab customers thus benefit from greater speed on two levels: technical and administrative.

Why GitLab and Adfinis?

Many organizations face a variety of challenges that come with increasing digitalization. They want to automate their internal development processes, thereby minimize their time to market, and rely on open and transparent tools. Adfinis’ broad partner network makes it possible to tackle these challenges with its customers in a targeted and individual way. GitLab excels in DevOps and DevSecOps above all in combination with HashiCorp, SUSE and Red Hat technologies, which truly makes Adfinis a breeding ground for innovation.

Michael Moser, CCO at Adfinis, knows what customers want: “In recent months we have already seen strong growth around DevSecOps. Together with GitLab, we offer our customers an all-round service: from planning through implementation to operations.”

Adfinis has relied on GitLab itself for years and is the ideal partner to support companies in planning, introducing and operating GitLab in their IT organization.

“In Adfinis we have a partner that is a perfect fit. Both the technical orientation and the vision of Adfinis are made for GitLab. Thanks to Adfinis’ ingenuity and 24/7 support, we have created a foundation here that enables innovation at all levels for our customers.” Ilaria Pazienza, Channel Sales Manager DACH, GitLab

About Adfinis

Our mission is to promote open source technologies, deliver high-quality work and operate business-critical systems around the clock so that our customers can focus on their core business. By working with us, customers are freed from vendor lock-in and stay one step ahead of their competition.

Adfinis is a service company based in Switzerland, the Netherlands and Australia that supports customers from the private and public sectors in planning, implementing and operating tailor-made infrastructure and software projects. Adfinis’ core competencies are open source engineering, 24/7 managed services and software development.

As a company, we are shaping a world of innovative, sustainable and resilient IT solutions based on trustworthy open source technology in order to unlock our customers’ full potential.

More information about Adfinis at www.adfinis.com

If you’re looking to implement Terraform, are you aware that Terraform alone isn’t going to cut it? Sure, it’s a great tool, but have you thought about how it integrates with your IT landscape? And about retraining your operational engineers? To help you, we present 5 building blocks to really future-proof your infrastructure with a successful implementation of Terraform.

We’ve tried it all before, haven’t we: in order to reduce complexity, we introduce another piece of technology and think we’ve found the holy grail. Only to discover a few months later that the technology in itself is just another piece of equipment. You’re not going to repair any leaks just by having a wrench in your shed. However, it is a great tool if you know what you’re trying to achieve and if you know how to use it effectively.

The same’s true for HashiCorp’s Terraform. It is a great piece of equipment for managing your infrastructure, lean and mean. Nevertheless, if you just buy the tool but have no clue how it best serves your business, you’ll be wasting your money. That would be a shame, because the opportunities Terraform offers are a game-changer for any company wanting to safely provision, and easily manage and scale, a multi-cloud infrastructure using only code.

But not to worry, we’ve got you covered. We offer you the five building blocks for successful implementation and use of any Terraform roll-out:

1. Coding your infrastructure with Terraform

It’s stating the obvious, of course, but in order to work with Terraform, you’ll need to learn how to work with it. You could experiment yourself, it’s that easy; but if you want someone to show you the ropes, you can always follow our training. We’ll teach you how to build, change, and destroy infrastructure. But you won’t just learn how to work with the easy, human-readable configuration language, because the training will also cover the following aspects.

2. The role of testing in successful infrastructure as code

Thinking about testing before you embark on your infrastructure as code journey is important for two distinct reasons. First: your developers have a clear idea about the goals you want to achieve and the requirements the infrastructure needs to meet. They’ll be checkmarking their way through their work and that’ll be very satisfying. Secondly: you’ll make sure the written code actually does what it should do, and you’re ensuring that connected, previously written code isn’t negatively impacted.
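As one illustration of such a test, the following minimal sketch checks that a planned change does not delete any resources. It assumes the plan has been exported in Terraform’s JSON plan representation (for example with `terraform show -json` on a saved plan file), where each entry in `resource_changes` lists its `change.actions`. The sample plan and resource addresses below are hypothetical, and the helper name is our own.

```python
def destructive_changes(plan: dict) -> list:
    """Return the addresses of all resources the plan would delete or replace."""
    return [
        rc["address"]
        for rc in plan.get("resource_changes", [])
        if "delete" in rc["change"]["actions"]
    ]


# Hypothetical, heavily shortened plan in the JSON plan structure.
sample_plan = {
    "resource_changes": [
        {"address": "aws_instance.web", "change": {"actions": ["create"]}},
        {"address": "aws_s3_bucket.logs", "change": {"actions": ["delete", "create"]}},
        {"address": "aws_vpc.main", "change": {"actions": ["no-op"]}},
    ]
}

to_review = destructive_changes(sample_plan)
print(to_review)
```

A check like this can run in a pipeline before the plan is applied, so that replacements or deletions require an explicit review instead of slipping through unnoticed.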

3. Knowing what you did and when with Git version control

It’s not only testing that ensures that your infrastructure as code works; we encourage you to use Git for version control as well. Git enables you to track the changes you’ve made to your code. This is essential if you want to know exactly what you have changed over time. Reverting to specific versions is also possible with Git. When you’re working as a team on the code, Git can combine changes made by multiple people, merging them into one version. In other words: Git helps you keep control over your infrastructure as code.

4. How does Terraform land in your ecosystem?

Terraform is never a standalone product but is often integrated with other on-prem and cloud technologies. Therefore, before you deploy Terraform, you need to know its dependencies and how well it all works together. Plus: have you considered the open source nature of Terraform and your journey to the cloud? Terraform is not only a fine tool for managing your single-cloud infrastructure, but it’s also ideal for managing all your clouds. You’ll be able to match different workloads with different clouds, making it possible to build a vendor-independent infrastructure, ensuring that your company is agile, flexible and scalable.

5. Building a developers’ culture

Last, but certainly not least: when you’re adopting infrastructure as code and Terraform, you have to think about retraining your operational engineers and building a culture in which thinking in code is encouraged. For example: machines are not pets, but more like a herd of cattle. This means that you don’t manually manage each machine, but use code to manage all your machines. Future-proof your company by thinking like a developer, solving issues with code, and scaling up as you grow.

How we can help

These 5 building blocks will help you successfully implement Terraform and use it to achieve your goals. As we’ve said, our Terraform training will not only help you understand its syntax, it will help you with all the other steps as well: start with testing, learn how to use Git, let it land in your ecosystem and build a developers’ culture. Are you ready to take the next step towards a future-proof infrastructure?